Most hunts start from zero. Teams scatter notes across Google Docs, Jira tickets, and Slack threads. Analysts forget what they hunted last quarter, let alone last year. They fall back to keyword searches and hope for the best. When they bring in AI, they copy and paste into ChatGPT and get answers that have no awareness of their environment. This approach works, but it is slow, chaotic, and manual.

Meet the Agentic Threat Hunting Framework (ATHF), which changes this approach by introducing structure. You follow the same pattern in every hunt. You record every lesson. You make every hypothesis searchable. AI tools use that structure to recall and reason across your entire hunting history instead of guessing.

For hunters using the PEAK framework, ATHF builds on the foundations of how to hunt by giving you structure, memory, and continuity. PEAK guides the work. ATHF ensures you capture the work, organize it, and reuse it across future hunts.

Once you add that structure and continuity, you still need a shared workflow. You need a consistent loop that both humans and AI can follow without drifting or reinventing steps.

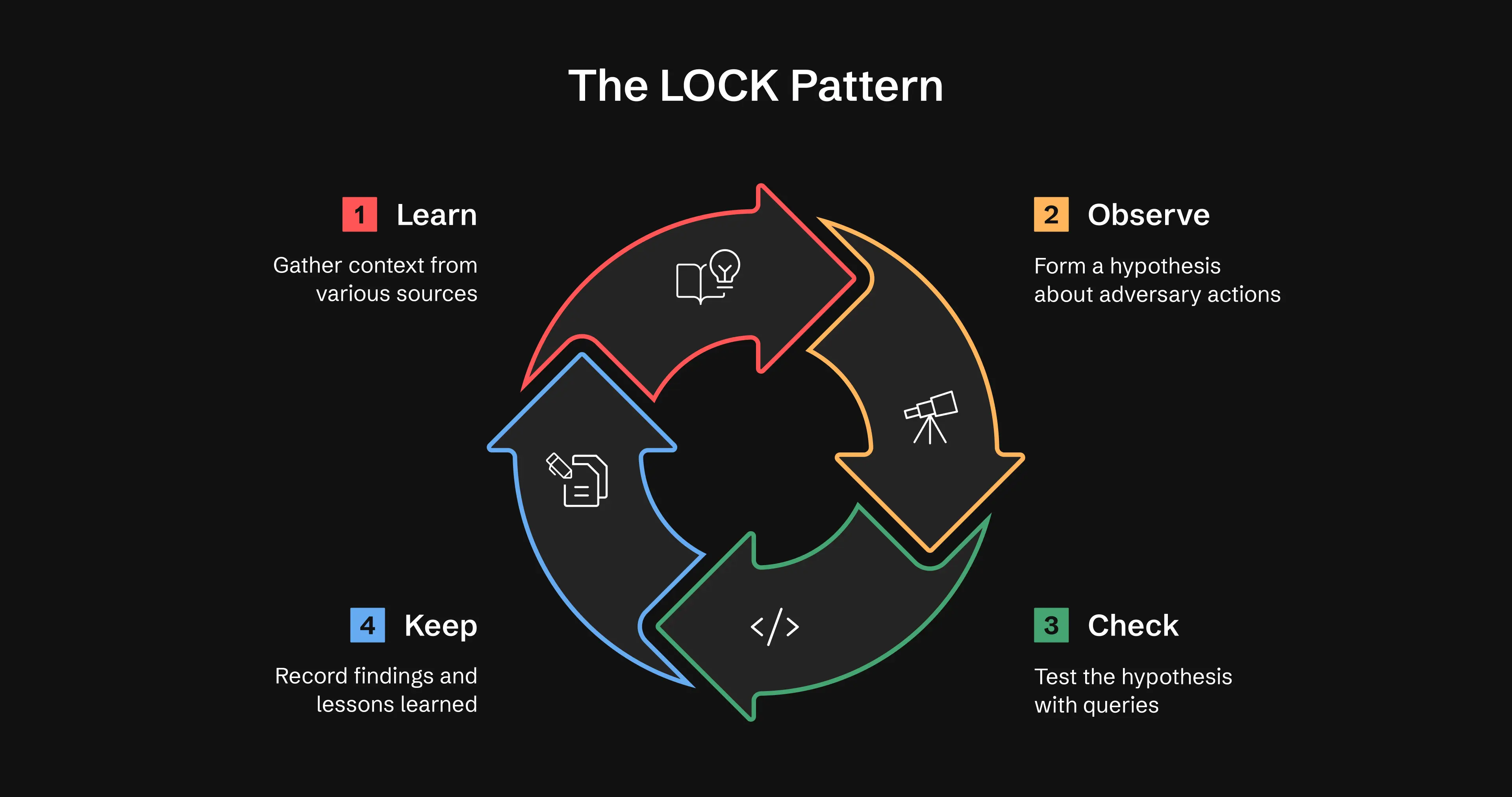

The LOCK Pattern

Learn → Observe → Check → Keep

Most hunting programs stall because every analyst starts each hunt differently. Humans improvise and AI improvises, and that’s when context breaks.

LOCK gives your team a single loop to follow. It keeps humans and AI aligned. It reduces repeated work. It turns every hunt into a repeatable process instead of a one-off effort.

Learn

Prepare the hunt. Gather context from cyber threat intelligence (CTI), alerts, and anomalies.

Example: Atomic Stealer and similar macOS information stealers use AppleScript commands to collect sensitive data from infected systems. This behavior maps to T1005 - Data from Local System under the Collection tactic (TA0009).

Observe

Form your hypothesis about adversary behavior and determine what normal and suspicious activity look like.

Example: Adversaries use AppleScript to collect sensitive user data on macOS systems. You can observe this activity when osascript processes run “duplicate file” commands or when non-browser processes access Safari cookie files, Notes databases, or large batches of user documents. Adversaries typically stage the collected data in temporary directories such as /tmp or /var/folders within short timeframes.
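To make the hypothesis concrete, here is a minimal Python sketch that turns the observable behaviors above into a checklist for a single event. The `ProcessEvent` shape, the browser allowlist, and the path lists are assumptions for illustration. Real EDR telemetry uses different field names, and this is not part of ATHF itself.

```python
from dataclasses import dataclass


@dataclass
class ProcessEvent:
    """Hypothetical, simplified event; real EDR field names will differ."""
    process_name: str
    command_line: str = ""
    target_path: str = ""  # file the process touched, if the telemetry has it


# Assumed values for illustration; tune these to your environment.
BROWSER_PROCESSES = {"Safari"}
SENSITIVE_FILES = ("Cookies.binarycookies", "NoteStore.sqlite")
STAGING_DIRS = ("/tmp/", "/private/tmp/", "/var/folders/", "/private/var/folders/")


def suspicion_reasons(event: ProcessEvent) -> list[str]:
    """Return the hypothesis signals that this event matches."""
    reasons = []
    if event.process_name == "osascript" and "duplicate file" in event.command_line.lower():
        reasons.append("osascript ran a duplicate file command")

    touched = event.target_path + " " + event.command_line
    if event.process_name not in BROWSER_PROCESSES and any(f in touched for f in SENSITIVE_FILES):
        reasons.append("non-browser process touched a sensitive store")

    # Staging alone is noisy, so it only counts alongside another signal.
    if reasons and any(d in touched for d in STAGING_DIRS):
        reasons.append("data staged in a temporary directory")
    return reasons
```

An event that matches no signal returns an empty list, which keeps normal activity such as Safari reading its own cookie file out of the results.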

Check

Run and test your hunting queries.

Search for osascript or AppleScript processes with suspicious file operations:

index=edr_mac sourcetype=process_execution
(process_name="osascript" OR process_name="AppleScript")
(command_line="*duplicate file*" OR command_line="*Cookies.binarycookies*" OR command_line="*NoteStore.sqlite*")
| stats count by _time, hostname, user, process_name, command_line, parent_process
| sort -_time

Keep

Record what happened, what you found, and what you plan to do next.

This hunt reviewed macOS data collection activity (T1005) across seven days. We analyzed 500,000 process execution events and 2 million file operations. We identified one confirmed instance of information-stealing behavior on an executive’s system, MAC-EXE-042. We confirmed the hypothesis when an unsigned binary ran AppleScript commands that duplicated Safari cookies, Notes databases, and filtered documents into /tmp. The malware attempted to exfiltrate the data to an external IP address. Our detection system flagged the activity before the exfiltration completed. We caught the data theft during the collection phase.
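A record like this is easier to reuse when every hunt lands in the same shape. The sketch below renders one hunt as a LOCK-structured markdown document. The `HuntRecord` fields, the `H-0001` identifier, and the heading layout are assumptions for illustration, not the official ATHF template.

```python
from dataclasses import dataclass


@dataclass
class HuntRecord:
    """Hypothetical record shape; the real ATHF templates may differ."""
    hunt_id: str
    title: str
    technique: str
    learn: str
    observe: str
    check: str
    keep: str


def render_lock_markdown(record: HuntRecord) -> str:
    """Render a hunt as markdown with one section per LOCK step, in order."""
    lines = [
        f"# {record.hunt_id}: {record.title}",
        "",
        f"ATT&CK technique: {record.technique}",
        "",
    ]
    steps = (
        ("Learn", record.learn),
        ("Observe", record.observe),
        ("Check", record.check),
        ("Keep", record.keep),
    )
    for heading, body in steps:
        lines += [f"## {heading}", "", body.strip(), ""]
    return "\n".join(lines)


record = HuntRecord(
    hunt_id="H-0001",  # hypothetical identifier
    title="macOS data collection via AppleScript",
    technique="T1005",
    learn="Atomic Stealer and similar macOS stealers use AppleScript to collect data.",
    observe="osascript runs duplicate file commands and stages data in /tmp.",
    check="Searched process execution events for osascript with suspicious file operations.",
    keep="One confirmed instance on MAC-EXE-042, caught during the collection phase.",
)
print(render_lock_markdown(record))
```

Keeping the four headings in a fixed order is the design choice that matters here. Both people and AI assistants can then find the hypothesis or the outcome of any hunt in the same place.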

You can read the full breakdown in the GitHub repository.

LOCK stays lightweight for humans and structured for AI. Without LOCK, every hunt turns into a maze of tabs. With LOCK, every hunt adds to the memory layer your team can reuse.

LOCK gives your team a consistent rhythm. The maturity model shows how teams scale that rhythm across their entire program.

ATHF Functions as a Workflow Engine

Many teams start with markdown only. Once they want consistency, repeatability, and automation, the command-line interface (CLI) becomes the backbone of their program.

ATHF provides a markdown framework and a CLI that manages hunts, enforces structure, validates content, and makes your investigations accessible and reusable.

The CLI turns ATHF from static documents into an operational system.

Why the CLI Matters

The CLI keeps your program consistent. It eliminates the overhead that slows or breaks hunting programs:

- Inconsistent file structure

- Missing metadata

- Forgotten follow-ups

- Untracked ATT&CK coverage

- One-off formatting choices

With the CLI, you create, track, validate, and measure hunts the same way every time.
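To illustrate the kind of structural check that catches missing sections, here is a minimal Python sketch. It assumes each hunt file uses markdown headings named after the four LOCK steps. The function name is hypothetical and this is not the ATHF CLI's actual validation logic.

```python
import re

LOCK_SECTIONS = ("Learn", "Observe", "Check", "Keep")

# Matches a markdown heading line such as "## Learn" and captures its title.
HEADING = re.compile(r"^#{1,6}[ \t]+(.+?)[ \t]*$", re.MULTILINE)


def missing_lock_sections(markdown_text: str) -> list[str]:
    """Return the LOCK sections that have no heading in the hunt file."""
    found = {match.group(1).strip().lower() for match in HEADING.finditer(markdown_text)}
    return [section for section in LOCK_SECTIONS if section.lower() not in found]


draft = "# Hunt\n\n## Learn\nContext here.\n\n## Check\nQuery here.\n"
print(missing_lock_sections(draft))
```

A check like this can run before every commit, so an incomplete hunt never reaches the shared history.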

The CLI Workflow

- Initialize your workspace

- Create a new hunt

- Validate your hunts

- Track ATT&CK coverage

- Use AI assistants with your repo
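The coverage step in the workflow above comes down to counting which techniques your hunts have touched. This Python sketch shows one way to tally that from hunt metadata. The input shape, the hunt identifiers, and the watchlist are assumptions for illustration, and the code does not reproduce how the ATHF CLI computes coverage.

```python
from collections import Counter


def technique_coverage(hunts: dict[str, list[str]]) -> Counter:
    """Count how many hunts touched each ATT&CK technique ID."""
    counts = Counter()
    for techniques in hunts.values():
        counts.update(set(techniques))  # count each technique once per hunt
    return counts


def uncovered(counts: Counter, watchlist: list[str]) -> list[str]:
    """Return watchlist techniques that no hunt has covered yet."""
    return [technique for technique in watchlist if counts[technique] == 0]


# Hypothetical hunt IDs mapped to the techniques each hunt examined.
hunts = {
    "H-0001": ["T1005"],
    "H-0002": ["T1005", "T1059.002"],
}
coverage = technique_coverage(hunts)
print(coverage.most_common())
print(uncovered(coverage, ["T1005", "T1555"]))
```

The list of uncovered techniques is a natural place to look when you choose the next hunt.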

The Five Levels of Agentic Threat Hunting

Every hunting program sits somewhere on a spectrum that ranges from fully manual to AI-supported, multi-agent workflows. ATHF organizes that spectrum into five levels. Each level increases capability as structure and memory improve. Teams can move through these levels at their own pace. Advancement is optional, and each step provides value on its own.

Level 0

Analysts work manually. Hunts hide inside ticket queues or spreadsheets.

Level 1

Analysts document hunts using LOCK-structured markdown files. You gain reliable history and repeatable knowledge transfer.

Level 2

AI assistants read your repo. They search past hunts, recall patterns, and recommend next steps.

Key context files make this work:

- AGENTS.md for environmental details

- knowledge/hunting-knowledge.md for threat hunting expertise

AI assistants support the CLI natively and will use athf commands automatically when interacting with your hunt repository.

This is where your assistant stops guessing and starts reasoning with your actual hunting history.
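Recall over past hunts can start very simply. The Python sketch below ranks hunt documents by how often they mention the query terms. It is a plain keyword search under assumed inputs (file names mapped to their text), not how any particular AI assistant retrieves context.

```python
def search_hunts(hunts: dict[str, str], query: str) -> list[tuple[str, int]]:
    """Rank hunt documents by total occurrences of the query terms."""
    terms = query.lower().split()
    scored = []
    for name, text in hunts.items():
        body = text.lower()
        score = sum(body.count(term) for term in terms)
        if score:
            scored.append((name, score))
    # Highest score first; ties break alphabetically for stable output.
    return sorted(scored, key=lambda item: (-item[1], item[0]))


# Hypothetical file names and contents standing in for a hunt repository.
past_hunts = {
    "H-0001.md": "osascript ran duplicate file commands; osascript staged data in /tmp",
    "H-0002.md": "powershell encoded command execution on Windows hosts",
}
print(search_hunts(past_hunts, "osascript"))
```

Because every hunt follows the same structure, even a basic search like this returns results that are comparable across hunts.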

Level 3

AI connects to your tools through MCP servers. It runs queries, enriches results, and updates hunts.

Examples:

- Run SIEM searches in Splunk, Elastic, or Chronicle

- Query EDR telemetry from CrowdStrike, SentinelOne, or Microsoft Defender

- Create tickets in Jira, ServiceNow, or GitHub

- Update hunt markdown files with findings

This shift replaces “copy and paste between five tools” with “ask and validate.”

Level 4

Multiple agents operate with shared memory. They monitor CTI feeds, detect new threats, draft hunts, validate hypotheses, run queries, and notify you.

You approve and refine instead of rebuilding the hunt from scratch.

Getting Started

You can adopt Level 1 in a day and reach Level 2 in a week.

- Clone the repo.

- Document hunts in LOCK format.

- Add context files when you have a base set of hunts.

- Use an AI assistant that can read your repo structure.

- Expand into CLI and MCP integration when you are ready.

You choose the pace. You choose the tools. ATHF fits the environment you already have.

Empower Your Analysts

Agentic threat hunting is not about replacing analysts. It is about building systems that:

- Remember your work

- Learn from your outcomes

- Give AI the context it needs

- Preserve institutional knowledge

- Strengthen repeatability across your program

When you stop losing history, you gain real leverage. When AI can recall past hunts, it becomes a force multiplier. Memory is a multiplier. Agency is the force.

Explore ATHF

The full framework, templates, example hunts, and CLI are available in the ATHF GitHub repository.

ATHF is open source and ready for real environments. Try it, adapt it, and share your findings. Feedback and contributions are always welcome.

Happy hunting!
